The field of image generation is moving fast. While the diffusion models used by popular tools like Midjourney and Stable Diffusion may seem like the best we’ve got, the next thing is always coming – and OpenAI may have hit it with “consistency models”, which can already perform simple tasks and order of magnitude faster than that of DALL-E.

The paper was put online as a preprint last month and was not accompanied by the subdued fanfare of OpenAI reservations for its major releases. That’s no surprise: this is definitely just a research paper, and it’s very technical. But the results of this early and experimental technique are interesting enough to note.

Consistency models are not particularly easy to explain but make more sense in contrast to diffusion models.

In diffusion, a model learns how to gradually subtract noise from an all-noise starting image, moving it step by step closer to the target prompt. This approach has enabled some of the most impressive AI visuals today, but essentially it relies on running tens to thousands of steps to get good results. That means it’s expensive to run and so slow that real-time applications are impractical.

The goal of consistency models was to create something that produced decent results in a single calculation step, or two at the most. To do this, the model is trained, like a diffusion model, to observe the image destruction process, but learns to take an image at any level of obscuration (i.e. with a little or a lot of information) and generate a complete source image in just one step.

But I hasten to add that this is only the most hand-waving description of what is happening. It’s this kind of paper:

A representative excerpt from the consistency document.

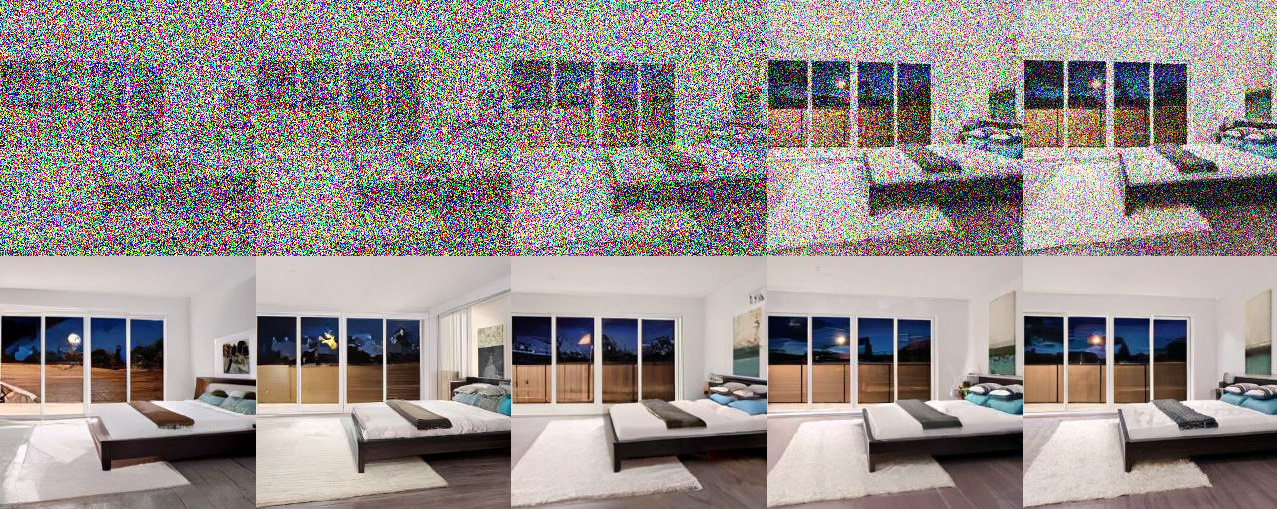

The resulting images aren’t stunning – many of the images can hardly even be called good. But what matters is that they were generated in one step instead of a hundred or a thousand. In addition, the consistency model is generalized to various tasks such as coloring, scaling, sketch interpretation, fill, etc., also with a single step (although often improved by a second).

Whether the image is mostly noise or mostly data, consistency models go straight to a final result.

This matters primarily because the pattern in machine learning research is generally that someone develops a technique, someone else finds a way to make it work better, and others fine-tune it over time as they add calculations to produce dramatically better results than you started with. That’s how we more or less ended up with both modern diffusion models and ChatGPT. This is a self-limiting process because, in practice, you can only dedicate so many computations to a given task.

What happens next is that a new, more efficient technique is identified that can do what the previous model did, much worse to begin with but also much more efficiently. Consistency models show this, although it is still early enough that they cannot be directly compared to diffusion models.

But it matters on another level, because it shows how OpenAI, by far the most influential AI research organization in the world right now, is actively looking beyond diffusion to the next generation of use cases.

Yes, if you want to do 1500 iterations in a minute or two with a cluster of GPUs, you can get amazing results with diffusion models. But what if you want to run an image generator on someone’s phone without draining the battery, or provide ultra-fast results in, say, a live chat interface? Diffusion is simply the wrong tool for the job, and OpenAI’s researchers are actively looking for the right one – including Ilya Sutskever, a well-known name in the field, to add to the contributions of the other authors, Yang Song, Prafulla Dhariwal, and Mark Chen. .

Whether consistency models are the next big step for OpenAI or just another arrow in its quiver — the future is almost certainly both multimodal and multimodel — will depend on how the research plays out. I’ve asked for more details and will update this post if I hear back from the investigators.